Python Web Scraping Introduction

The web holds an enormous amount of data, from news articles to product listings, but most of it is published for people to read rather than for programs to consume. This is where web scraping comes into play. By using Python and libraries like requests and BeautifulSoup, we can automate the process of collecting pages and parsing the data they contain.

What is Web Scraping?

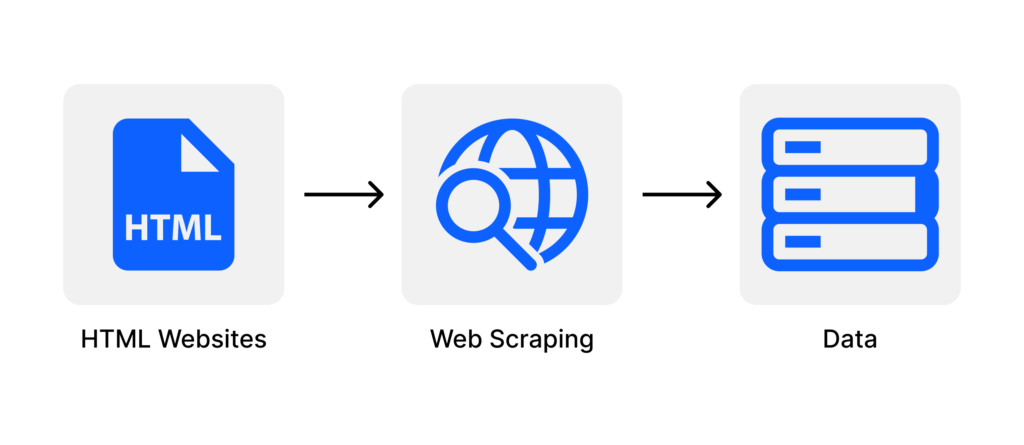

Web scraping is the technique of extracting data from websites using automated scripts or bots. It involves sending HTTP requests to specific URLs and parsing the HTML or XML responses to extract the desired information. This data can then be used for various purposes, such as data analysis, research, or business intelligence.

Web scraping is widely used across industries. Data scientists rely on it to gather large datasets for analysis, marketers use it to monitor competitors and gather market insights, and journalists use it for investigative reporting.

The Python Requests Library

To make HTTP requests and interact with web pages, we can use the Python requests library. It provides a simple interface for sending GET, POST, PUT, PATCH, DELETE, and HEAD requests to a URL. It also handles cookies, sessions, and authentication, which makes it a versatile tool for web scraping.

To install the requests library, you can use the following command:

pip install requests

Once installed, you can import the library and start making requests. For example, to retrieve the HTML content of a web page, call the requests.get() function and read the decoded body from the text attribute of the response:

import requests

response = requests.get('https://www.example.com')

html_content = response.text
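In practice a request can hang or come back with an error status, so a slightly more defensive version is often useful. The following is a minimal sketch, not part of the original example: the helper name fetch_html, its default timeout of 10 seconds, and the optional session parameter are all assumptions made for illustration.

```python
import requests


def fetch_html(url, timeout=10.0, session=None):
    """Return the body of `url` as text, raising on HTTP error statuses.

    `fetch_html` is a hypothetical helper. Passing a requests.Session as
    `session` reuses connections and cookies across calls.
    """
    http = session or requests
    # requests applies no timeout by default, so set one explicitly.
    response = http.get(url, timeout=timeout)
    # Raise requests.HTTPError for 4xx and 5xx responses.
    response.raise_for_status()
    return response.text
```

Calling fetch_html('https://www.example.com') would then return the same kind of string as html_content above, or raise an exception instead of silently returning an error page.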

The BeautifulSoup Library

While the requests library is great for making HTTP requests, it does not parse the HTML or XML it downloads. This is where the BeautifulSoup library comes in. BeautifulSoup is a Python library specifically designed for extracting data from HTML and XML documents.

To install BeautifulSoup, you can use the following command:

pip install beautifulsoup4

Once installed, you can import the library and create a BeautifulSoup object to parse the HTML content. For example:

from bs4 import BeautifulSoup

soup = BeautifulSoup(html_content, 'html.parser')

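The second argument, 'html.parser', selects the parser that ships with Python's standard library, so nothing else needs to be installed. With the BeautifulSoup object, you can now navigate the HTML structure, search for specific elements, and extract the desired data, even on pages with deeply nested markup.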
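To try this without touching the network, the parsing step can be run on an inline HTML string. This is a small sketch; the sample markup below is made up and simply stands in for html_content fetched with requests.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for html_content fetched with requests.
html_content = """
<html>
  <head><title>Example Page</title></head>
  <body>
    <h1 id="main-heading">Hello, scraper</h1>
    <p class="intro">First paragraph.</p>
  </body>
</html>
"""

soup = BeautifulSoup(html_content, "html.parser")

page_title = soup.title.string   # text inside the <title> tag
heading = soup.find("h1")        # first <h1> element, or None if absent

print(page_title)
print(heading["id"], heading.get_text())
```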

Extracting Data with BeautifulSoup

Once you have a BeautifulSoup object representing the HTML content, you can start extracting data using various methods and selectors. Here are some common techniques:

Searching for Elements

BeautifulSoup provides several methods for searching and filtering elements. You can search for elements by tag name, class name, ID, attributes, and more. For example, to find all <a> tags on a page, you can use the find_all() method:

links = soup.find_all('a')

You can also use CSS selectors to find elements. For example, to find all elements with the class “article”, you can use the select() method:

articles = soup.select('.article')
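The search options mentioned above (tag name, class, ID, attributes, CSS selectors) can be compared side by side on a small document. The markup and variable names here are invented for illustration.

```python
from bs4 import BeautifulSoup

# Made-up markup used only to demonstrate the search methods.
html = """
<div class="article featured" id="top-story"><a href="/news/1">Story one</a></div>
<div class="article"><a href="/news/2">Story two</a></div>
<div class="sidebar"><a href="https://example.com/about">About</a></div>
"""
soup = BeautifulSoup(html, "html.parser")

all_links = soup.find_all("a")                      # by tag name
articles = soup.find_all("div", class_="article")   # by tag name and class
top_story = soup.find(id="top-story")               # by ID; None if absent
# By attribute value: keep only links whose href is an absolute https URL.
absolute_links = soup.find_all(
    "a", href=lambda value: value is not None and value.startswith("https://")
)
article_links = soup.select("div.article > a")      # by CSS selector

print(len(all_links), len(articles), len(absolute_links), len(article_links))
```

Note that class_="article" also matches the first div, whose class attribute is "article featured", because BeautifulSoup matches against each individual class.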

Extracting Text

Once you have found the desired elements, you can extract their text using the text attribute. It returns the text of the element and all of its descendants, with the HTML tags removed. For example, if paragraph is a <p> tag (say, the result of soup.find('p')), you can use:

paragraph_text = paragraph.text
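The text attribute keeps whatever whitespace the page author left in the markup, which is rarely what you want to store. The get_text() method accepts separator and strip arguments for cleaning this up; the sketch below, on a made-up paragraph, shows the difference.

```python
from bs4 import BeautifulSoup

# Made-up paragraph with untidy whitespace and a nested <b> tag.
html = "<p>  Web scraping with <b>Python</b>\n   is fun.  </p>"
soup = BeautifulSoup(html, "html.parser")
paragraph = soup.find("p")

raw_text = paragraph.text                         # original whitespace preserved
glued = paragraph.get_text(strip=True)            # fragments stripped, joined with ""
spaced = paragraph.get_text(" ", strip=True)      # fragments stripped, joined with " "
normalized = " ".join(paragraph.get_text().split())  # collapse all whitespace runs

print(repr(raw_text))
print(repr(glued))
print(repr(spaced))
```

With strip=True and no separator, the text fragments around the nested tag are joined directly, so words can run together; passing a separator avoids that.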

Extracting Attributes

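Elements can also have attributes such as href, src, or class. You can access these attributes using dictionary-like syntax. A missing attribute raises a KeyError, and class is returned as a list because it can hold several values. For example, if image is an <img> tag (say, the result of soup.find('img')), you can extract its URL with: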

image_url = image['src']
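Two details tend to matter on real pages: some tags lack the attribute you want, and src or href values are often relative. A possible way to handle both is sketched below, where page_url and the sample markup are assumptions for the example.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

page_url = "https://www.example.com/gallery/"   # hypothetical page address
html = '<img class="photo large" src="images/cat.png"><img alt="no source">'
soup = BeautifulSoup(html, "html.parser")

image_urls = []
for image in soup.find_all("img"):
    src = image.get("src")   # returns None instead of raising KeyError
    if src:
        # Resolve a relative path against the address of the page it came from.
        image_urls.append(urljoin(page_url, src))

first_image = soup.find("img")
css_classes = first_image["class"]   # multi-valued attribute, returned as a list

print(image_urls)
print(css_classes)
```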

Navigating the HTML Structure

BeautifulSoup provides attributes and methods to navigate the HTML structure, such as finding parent or sibling elements. These can be useful when the desired data is not directly under the current element. For example, if child is an element with the class “child” (say, the result of soup.find(class_='child')), you can get its parent element with:

parent = child.parent
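Sibling navigation works in a similar way. The sketch below uses a made-up list to show parent, find_parent(), and the find_next_sibling() family, which skip over the whitespace text nodes that sit between tags.

```python
from bs4 import BeautifulSoup

# Made-up menu markup for demonstrating navigation.
html = """
<ul id="menu">
  <li class="child">Home</li>
  <li>Blog</li>
  <li>Contact</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

child = soup.find(class_="child")
parent = child.parent                          # the enclosing <ul>
container = child.find_parent("ul")            # nearest ancestor that is a <ul>
next_item = child.find_next_sibling("li")      # next <li> at the same level
later_items = child.find_next_siblings("li")   # all following <li> siblings

print(parent["id"])
print(next_item.get_text())
print([item.get_text() for item in later_items])
```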

Best Practices for Web Scraping

While web scraping can be a powerful tool, it’s important to follow some best practices to ensure that you are scraping responsibly and ethically. Here are some guidelines:

Check the Website’s Terms of Use

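Before scraping a website, always check its terms of use and its robots.txt file to see whether scraping is allowed. The terms of use state what the site permits, while robots.txt tells automated clients which paths they may request. Some websites explicitly forbid scraping, while others may have specific rules or limitations.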
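The robots.txt part of this check can be automated with the standard library's urllib.robotparser module. The sketch below parses a made-up robots.txt so that it runs offline; the user agent name my-scraper is also an assumption. It does not replace reading the terms of use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real script would instead call
# parser.set_url("https://www.example.com/robots.txt") and parser.read().
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 5",
]

parser = RobotFileParser()
parser.parse(robots_lines)

user_agent = "my-scraper"   # hypothetical name of our bot
allowed = parser.can_fetch(user_agent, "https://www.example.com/articles/1")
blocked = parser.can_fetch(user_agent, "https://www.example.com/private/data")
delay = parser.crawl_delay(user_agent)   # seconds requested by the site, or None

print(allowed, blocked, delay)
```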

Be Respectful and Ethical

Scraping too many pages too quickly can put a strain on a website’s server and may be considered unethical. Be mindful of the website’s resources and don’t overload the server with excessive requests.

Use Delay and Throttling

To avoid overwhelming a website with requests, it’s a good practice to introduce delays between requests. This leaves the server capacity to handle other users’ requests and reduces the risk of your script being blocked.
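One simple way to add such delays is to sleep for a short random interval between consecutive requests. The function below is an illustrative sketch: crawl_politely, its parameters, and the delay range are assumptions, and fetch can be any callable that downloads a URL (for example, one built on requests.get).

```python
import random
import time


def crawl_politely(urls, fetch, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing a random interval between requests.

    `fetch` is any callable that takes a URL and returns its content.
    `sleep` is injectable so the function can be tested without waiting.
    """
    results = {}
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, only between requests.
            sleep(random.uniform(min_delay, max_delay))
        results[url] = fetch(url)
    return results
```

Randomizing the pause avoids sending requests at a perfectly regular rhythm. If the site publishes a crawl delay in robots.txt, that value is a sensible lower bound for min_delay.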

Handle Errors and Exceptions

Web scraping can be prone to errors, such as connection errors or missing elements. Make sure to handle these errors gracefully in your code and implement error-handling mechanisms, such as retries or error logging.
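The two failure modes named above can be handled separately. The sketch below shows one possible retry loop with exponential backoff and logging, plus a small helper for elements that may be missing. The names fetch_with_retries and text_or_none, the logger name, and the default values are assumptions for illustration.

```python
import logging
import time

logger = logging.getLogger("scraper")   # hypothetical logger name


def fetch_with_retries(fetch, url, attempts=3, backoff=1.0, sleep=time.sleep):
    """Call fetch(url), retrying failed attempts with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as error:
            # In a real scraper, catch requests.RequestException instead.
            logger.warning("Attempt %d/%d for %s failed: %s",
                           attempt, attempts, url, error)
            if attempt == attempts:
                raise   # out of attempts: let the caller see the error
            sleep(backoff * 2 ** (attempt - 1))   # wait 1s, 2s, 4s, ...


def text_or_none(soup, selector):
    """Return the stripped text of the first match, or None if nothing matches."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element is not None else None
```

Checking for None before reading text or attributes avoids the AttributeError that otherwise appears when a page layout changes and find() or select_one() no longer matches anything.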

Respect Privacy and Copyright

When scraping websites, be mindful of privacy and copyright laws. Avoid scraping personal or sensitive information, and respect the copyrights of the website’s content.

Conclusion

Web scraping with Python and libraries like requests and BeautifulSoup opens up a world of possibilities for data gathering and analysis. By automating the process of extracting data from websites, we can save time and access valuable information with ease.

Remember to scrape responsibly and ethically, respecting the terms of use of the websites you scrape and being mindful of server resources. With the right techniques and best practices, web scraping can be a powerful tool for various industries and applications.

So why wait? Harness the power of web scraping with Python and unlock the wealth of information available on the web.
